Why AI Is Changing SEO Forever

For years, traditional SEO has meant optimizing for keywords, building backlinks, and creating content that ranks in the top 10 search results. With the rise of generative AI, that model is becoming obsolete.

The SEO game is no longer about winning a click. It's about earning a citation.

AI search engines and agents are changing not just how users find information, but what they expect from it, and that demands a more holistic, integrated approach to SEO.

Marko Miljkovic, Veza Digital SEO manager and SEO expert, explains that as we move into 2026, AI-first search is completely changing how brands are discovered and trusted. Traditional SEO focused on visibility, but AI SEO is about eligibility.

It’s about making sure machines can understand, trust, and reference your content.

The biggest change is in user intent. People using LLM-based tools like ChatGPT or Perplexity aren't just browsing for options; they're looking for a clear solution. That makes AI-driven search far more decisive and conversion-oriented than traditional SERPs.

As AI Overviews evolve into full AI Modes, brand strength is becoming one of the most important ranking factors. If your brand isn’t recognized, cited, or trusted, it won’t appear in AI-generated answers.

That’s why evergreen native PR and listicle placements are now essential. Building backlinks from credible news domains is the most effective long-term strategy for both Google and AI visibility since those sites are crawled more frequently by AI models.

On the content side, depth, readability, and semantic structure are what truly matter. LLMs don’t reward keyword density anymore. They value clarity, context, and strong entity relationships.

The best-performing pages are written like lessons: structured, comprehensive, and easy for both humans and machines to understand.

AI SEO today is less about chasing algorithms and more about teaching machines who you are, what you do, and why you can be trusted.

From Keywords to AI-Driven Search

As we mentioned, traditional SEO was based on a simple keyword-matching system. A person typed in a few words, and the search engine looked for web pages with content that included those exact words.

Copywriters and content creators focused mainly on which keywords to use, how often to use them, and where to put them.

With AI SEO, search engines use artificial intelligence to interpret queries. They don't just match keywords; they interpret the meaning, the context, and the user's underlying goal (what the person is really looking for).

They then combine information from many different sources to create a single, comprehensive answer.

This completely changes the role of your content. It is no longer just a standalone page competing for a click. It becomes a high-quality piece of data that the AI can use to construct a detailed answer to a complicated question.

Generative AI also changes the user's journey: users now engage in a "prompt and converse" journey. Here is a breakdown to help marketers understand the shift.

- Fewer Clicks, More Answers

AI Overviews in Google Search (formerly the Search Generative Experience, SGE) or the direct answers from Perplexity provide a complete solution directly on the results page. This leads to a jump in "zero-click searches," where the user's question is answered without them ever visiting a website.

- Conversational & Multi-Turn Queries

Users are moving from short, transactional keywords to full-sentence questions or voice search queries. They also ask follow-up questions, which creates a conversational loop. The AI remembers the context of the entire conversation, making each subsequent response more personalized and relevant.

- Influence vs Traffic

The new metric for success is citation share: how often your website is recognized as a source in an AI-generated answer. Brands that are frequently referenced build authority and trust, even if the user never clicks through to their site. The goal shifts from attracting a click to influencing the AI's response.
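The article does not give a formula for citation share, so the following is only one possible way to sketch it: the fraction of sampled AI answers that cite at least one URL on your domain. The function names, the sample data, and the idea of sampling answers to a set of tracked prompts are all assumptions made for illustration.

```python
from urllib.parse import urlparse


def _host(url: str) -> str:
    """Return the lowercase hostname of a URL, or an empty string."""
    return urlparse(url).hostname or ""


def citation_share(answers: list[list[str]], domain: str) -> float:
    """Fraction of sampled AI answers citing at least one URL on `domain`.

    `answers` holds one list of cited URLs per AI answer (hypothetical input).
    """
    if not answers:
        return 0.0
    domain = domain.lower()

    def cites(urls: list[str]) -> bool:
        # Count the bare domain and any of its subdomains as a citation.
        return any(
            _host(u) == domain or _host(u).endswith("." + domain) for u in urls
        )

    return sum(1 for urls in answers if cites(urls)) / len(answers)


# Hypothetical sample: cited URLs from four AI answers to tracked prompts.
sampled_answers = [
    ["https://www.example.com/guide", "https://other.org/post"],
    ["https://other.org/post"],
    ["https://blog.example.com/ai-seo"],
    [],
]
share = citation_share(sampled_answers, "example.com")
print(f"Citation share: {share:.0%}")
```

Tracking this number over time for the same prompts is what makes it useful; a single reading says little on its own.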

Why SEO Will Still Exist in 5 to 10 Years (But Look Very Different)

Despite these massive AI changes, SEO is not dead; it is evolving. The core purpose of SEO, making information findable and understandable, remains essential. However, the tactics and strategies will look very different.

- Switch From Volume to Authority

AI-generated content is no longer going to make the cut; it will bring down the value of your content. The human touch is where the real value lies. The E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) framework will become the most important factor for visibility and will shape how effective your content is.

- Optimizing for Machines AND Humans

The future of AI SEO is going to be based on a dual strategy. Marketers will need to create content that is not only interesting for a human reader but also highly structured and machine-readable for AI crawlers.

- New Technical Optimization

SEO professionals and marketers will need to learn and master new technical skills, such as working with LLMs (Large Language Models), APIs (Application Programming Interfaces), and advanced structured data, to communicate directly with AI agents and search engines.

- AI Agents

Beyond search engines, our experts are seeing the rise of autonomous AI agents that perform multi-step tasks for users. For example, an AI agent might plan a trip by booking flights, finding a hotel, and researching restaurants. Optimizing for these agents requires a whole new approach to visibility. The brands that are best prepared for this shift will be the ones that succeed.

Veza Digital and our SEO experts understand that this is the future. We still apply Webflow and SEO strategies to help brands adapt and thrive, but now with an AI-SEO-first approach that elevates our clients' marketing efforts to new heights of growth.

Traditional SEO vs AI-First SEO

The core principles of SEO remain visibility, authority, and user intent, but the execution has changed, which means that what worked in the past is no longer enough. Here's a breakdown of the key differences between traditional and AI SEO.

Speed and Simplicity Matter More Than Ever

In the past, most marketers and content writers put keyword-soaked, 3,000-word articles at the center of their SEO content strategy.

Today, AI-driven search engines and agents prioritize speed and clarity. They want to get to the answer quickly. Content that is unnatural, repetitive, or buried under layers of fluff is less likely to be used.

The goal is human-written AI SEO content that is concise and to the point, because an AI can readily pull from it as a source.

To ensure maximum impact and efficiency, marketers should structure their content to be instantly accessible. Content writers should address every major discussion point with a clear, direct answer to the implied question at the top of the section.

Organize the material with a logical structure, using descriptive headings (H2 and H3) that clearly signal the purpose of the content beneath them.

Most importantly, marketers need to make content scannable for both human readers and search engines by consistently using formatting tools such as bullet points, numbered lists, and bolded text to highlight and present key information.

Structured, Semantic Text Wins Visibility

Traditional SEO focused on keywords and internal linking to establish relevance. AI search optimization takes this a step further by requiring a focus on semantic meaning and relationships between entities.

AI systems, especially those using Retrieval-Augmented Generation (RAG), rely on a deep understanding of concepts. They don't just see a page; they see a web of interconnected entities.

By structuring your content semantically—meaning you explicitly define the relationships between your brand, products, services, and key concepts—you make it easier for the AI to "connect the dots" and choose your site as a source. This is about building a digital knowledge graph for your brand.

Metadata and Schema Carry More Weight

For decades, metadata was about giving search engines clues about your page.

Now, it's a direct conversation with AI. While a traditional meta description might be a punchy call-to-action, an AI-first meta description should be a concise summary that helps the AI understand the page's core purpose.

Similarly, structured data (Schema.org markup) is no longer a "nice-to-have" for rich snippets. It's a foundational element of AI SEO.

It gives AI crawlers a machine-readable data layer, telling them exactly what each piece of content represents.

Blocking Crawlers Makes You Invisible to AI Search

Before, some websites used the robots.txt file to stop search engine crawlers from looking at pages they thought were unimportant.

However, this is risky. Why? Because robots.txt just tells crawlers to stop, it does not explain what the content is or why it's not valuable.

The new proposal, llms.txt, addresses this problem. Rather than blocking, it is a proposed convention designed for Large Language Models (LLMs) and AI agents.

The llms.txt file gives AI a structured summary or map of your site's content, allowing you to directly guide the models toward the information most relevant to the topic. It moves the strategy from simple blocking to intelligent direction.

When marketers provide a clean, prioritized list of pages and descriptions in llms.txt, they tell AI agents exactly what information is most important on their site.

For Veza Digital, this is a key part of our AI optimization checklist. Our experts ensure your content is not just accessible, but understandable and prioritized for the AI.

AI Training vs. AI Search - What’s the Difference?

This is a critical difference that many marketers miss.

- AI Training is the process of a large language model learning from a massive, static dataset. It's how the model builds its core understanding of language, facts, and concepts. Once trained, the model’s knowledge is fixed until the next major update.

- AI Search is the live, real-time application of that trained model. When a user asks a question, the AI uses its core knowledge to understand the intent, then retrieves fresh, real-time information from the web (via AI crawlers and fetchers such as PerplexityBot and ChatGPT-User) to generate a response.

An AI SEO strategy is all about influencing the AI search process. While marketers have little control over the training data, they have full control over how a site is structured for real-time retrieval.

The optimizations we've discussed (speed, simplicity, structured data, and llms.txt) are all geared towards making your site a primary source for that real-time AI search.

AI Optimization Checklist (TL;DR for Busy Teams)

For teams focused on growth, startups, and marketers, the move to AI SEO can feel overwhelming. This checklist is a practical roadmap to ensure a site is optimized for the future of search.

1. Metadata Best Practices

- Title Tags

Write descriptive titles that clearly state the page's core topic. Focus on user intent and include key concepts, not just keywords.

- Meta Descriptions

Create concise, summary-style descriptions (under 160 characters) that act as a clear, one-sentence answer to a potential query.
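The length guideline above is easy to check automatically. The snippet below is a minimal sketch, assuming the 160-character limit from this checklist; the function name and the messages it returns are hypothetical.

```python
def check_meta_description(text: str, max_chars: int = 160) -> list[str]:
    """Return a list of problems found in a meta description (empty if none)."""
    problems = []
    # Collapse runs of whitespace so stray line breaks do not inflate the count.
    cleaned = " ".join(text.split())
    if not cleaned:
        problems.append("description is empty")
    if len(cleaned) >= max_chars:
        problems.append(
            f"description is {len(cleaned)} characters; keep it under {max_chars}"
        )
    return problems


# Hypothetical description written as a one-sentence answer to a query.
description = "AI SEO is the practice of structuring content so AI search engines can understand, trust, and cite it."
print(check_meta_description(description) or "looks fine")
```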

2. llms.txt Setup

- Create the File

Place an llms.txt file at the root of your domain (yourdomain.com/llms.txt).

- Define Crawl Instructions

Use the file to guide AI models and agents (for example, those identifying as ChatGPT-User) to your most valuable, authoritative content. Note that the llms.txt proposal describes a Markdown file of summaries and links rather than allow/disallow directives, so rules about which user-agents can and cannot crawl certain parts of your site belong in robots.txt.

- Provide Structured Summaries

Include a block of structured data that summarizes your key site sections, giving AI agents a pre-digested view of your content.

3. AI-Friendly robots.txt

- Audit and Review

Re-evaluate your existing robots.txt file. Ensure you are not blocking legitimate AI crawlers like Google-Extended, PerplexityBot, or GPTBot. These crawlers are key for your visibility in AI-generated answers.

- Remove Unnecessary Disallows

If your robots.txt has a blanket Disallow: /, you are telling AI search to ignore your entire site. Remove or modify this to allow access to your public content.
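One way to run this audit is with Python's standard-library robots.txt parser. The sketch below assumes you paste in the text of your own robots.txt; the list of crawler names comes from this article, and the two sample files are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Crawler tokens named in this article; extend the list as needed.
AI_CRAWLERS = ["GPTBot", "Google-Extended", "PerplexityBot", "ClaudeBot"]


def blocked_ai_crawlers(robots_txt: str, url: str = "https://example.com/") -> list[str]:
    """Return the AI crawlers that `robots_txt` forbids from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in AI_CRAWLERS if not parser.can_fetch(bot, url)]


# Hypothetical files: a blanket block, and a file that blocks only GPTBot.
BLANKET_BLOCK = "User-agent: *\nDisallow: /\n"
SELECTIVE_BLOCK = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"

print(blocked_ai_crawlers(BLANKET_BLOCK))
print(blocked_ai_crawlers(SELECTIVE_BLOCK))
```

Python's parser matches user-agent names by simple substring, which may differ slightly from how individual crawlers interpret the file, so treat the result as a first check rather than a guarantee.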

4. Structured Data and Semantic Markup

- Schema for Entities

Use Schema.org markup to define key entities on your site: the Organization, Person (for authors), and Product or Service types. This helps AI understand who you are and what you do.

- Use Specific Schemas

Implement specific schemas such as HowTo and FAQPage to clearly label content. This is a direct signal to the AI that this content is a perfect source for a specific type of query.
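As a rough illustration of the two items above, the following sketch builds Organization and FAQPage markup as JSON-LD. The helper names, the company name, and the URL are placeholders, and a real page would usually carry more properties than shown here.

```python
import json


def organization_jsonld(name: str, url: str) -> dict:
    """Minimal Organization entity (only two properties, for illustration)."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
    }


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """FAQPage entity built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data: dict) -> str:
    """Wrap JSON-LD in the script tag that goes into the page's HTML."""
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


# Placeholder content for the example.
faq = faq_jsonld([("What is AI SEO?", "Optimizing content so AI systems can understand and cite it.")])
print(as_script_tag(organization_jsonld("Example Co", "https://example.com")))
print(as_script_tag(faq))
```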

5. Clean HTML and Fast Load Speed

- Semantic HTML

Use proper HTML tags (<p>, <ul>, <h2>, etc.) to create a clean, logical document structure. Avoid using CSS for structure, as AI crawlers read the raw HTML.

- Core Web Vitals

Ensure your site loads quickly on both desktop and mobile. Fast loading times and good Core Web Vitals (low LCP, INP, and CLS scores; INP replaced FID as a Core Web Vital in March 2024) are a signal of a high-quality user experience, which is a factor AI models consider when evaluating sources.

6. Indicating Freshness (Last Updated Tags)

- Display Dates

Clearly display "Last Updated" dates on your articles and guides. For highly volatile topics like "Best AI SEO Tools 2026," this signals to the AI that the information is current and trustworthy.

- Use Schema

Use the dateModified property in your Schema markup to provide a machine-readable last-updated date.
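A minimal sketch of that markup, assuming an Article page and ISO 8601 dates; the helper name and the sample dates are illustrative only.

```python
import json
from datetime import date


def article_jsonld(headline: str, published: date, modified: date) -> dict:
    """Article markup carrying machine-readable published and modified dates."""
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),  # e.g. 2025-06-01
        "dateModified": modified.isoformat(),
    }


# Placeholder article and dates.
markup = article_jsonld("Best AI SEO Tools 2026", date(2025, 6, 1), date(2026, 1, 15))
print(json.dumps(markup, indent=2))
```

Keeping the visible "Last Updated" date and the dateModified value in sync avoids sending mixed signals.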

7. Accessible APIs, RSS, or Feeds

- Direct Data Access

Enterprises and SaaS companies should consider creating a public-facing API that allows AI agents to directly query their product data, knowledge base, or FAQs. This is the ultimate form of AI search optimization.

- Feeds for Agents

Provide a clean, structured RSS or Atom feed that allows agents to subscribe to and get real-time updates on your content.
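For teams whose CMS does not already produce a feed, the sketch below shows roughly what generating a small RSS 2.0 document could look like using only the standard library. The feed title, URLs, and item data are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title: str, link: str, description: str, items: list[dict]) -> str:
    """Build a minimal RSS 2.0 feed; each item needs title, link, published."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item in items:
        node = ET.SubElement(channel, "item")
        ET.SubElement(node, "title").text = item["title"]
        ET.SubElement(node, "link").text = item["link"]
        ET.SubElement(node, "guid").text = item["link"]  # the URL doubles as the ID
        # RSS expects RFC 822 style dates, which format_datetime produces.
        ET.SubElement(node, "pubDate").text = format_datetime(item["published"])
    return ET.tostring(rss, encoding="unicode")


# Placeholder feed content.
feed_xml = build_rss(
    "Example Co Blog",
    "https://example.com/blog",
    "Guides on AI SEO",
    [{
        "title": "AI SEO Checklist",
        "link": "https://example.com/blog/ai-seo-checklist",
        "published": datetime(2026, 1, 15, 9, 0, tzinfo=timezone.utc),
    }],
)
print(feed_xml)
```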

8. Unified Content (Avoid Fragmenting Across Multiple Pages)

- Consolidate Information

Instead of having five different blog posts on slightly different aspects of a topic, create one comprehensive, authoritative guide. AI agents prefer a single source of truth that covers a topic in depth rather than having to stitch together information from multiple fragmented pages. This is the future of AI SEO.

Key Optimizations for AI Search Visibility

Success now goes beyond just creating content. It's about making that content technically accessible, understandable, and trustworthy to machines. Here are the critical optimizations for gaining visibility in AI-first search.

Configure robots.txt for AI Crawlers

To better prepare your website for the current era of AI, you need to change how you think about your robots.txt file.

Why the Old Way is Outdated

In the past, people used robots.txt primarily to block standard search engine crawlers (like Googlebot) from "low-value" or duplicate pages to save crawl budget.

The new focus must be on allowing the specific crawlers that feed generative AI systems. If an AI crawler is blocked, your content cannot be used to generate AI Overviews, generative answers, or other AI-powered search features.

The AI-First Approach

Robots.txt is your first point of contact with any bot. To ensure a site is a potential source for AI-generated content, you must check that your robots.txt file does not block the key AI-specific bots, which are identified by their user-agent names.

The example configuration ensures your site is widely accessible to all crawlers (User-agent: * / Allow: /) while being extra clear that these crucial AI crawlers are welcome. This moves the strategy from blocking to guiding, allowing AI to find and synthesize any public-facing information.
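The configuration itself is not reproduced in this text, so the following is only a guess at its shape: a small generator that writes the permissive default group plus an explicit group per AI crawler. The crawler list and the sitemap URL are assumptions.

```python
def build_robots_txt(ai_crawlers: list[str], sitemap_url: str = "") -> str:
    """Render a permissive robots.txt that also names each AI crawler."""
    lines = ["User-agent: *", "Allow: /", ""]
    for bot in ai_crawlers:
        # An explicit group per crawler; functionally the same as the default
        # group, but it makes the intent obvious to anyone reading the file.
        lines += [f"User-agent: {bot}", "Allow: /", ""]
    if sitemap_url:
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder values for the example.
robots_txt = build_robots_txt(
    ["GPTBot", "Google-Extended", "PerplexityBot"],
    "https://example.com/sitemap.xml",
)
print(robots_txt)
```

Because the default group already allows everything, the named groups are a statement of intent rather than a technical requirement.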

Set Up an llms.txt File (What It Is and Why It Matters)


llms.txt is a proposed standard designed specifically for Large Language Models (LLMs). Unlike robots.txt, which tells a crawler what it can't access, llms.txt tells an LLM what content on your site is most important. It's a structured file that acts as a prioritized table of contents for AI.

This matters because llms.txt allows marketers to proactively guide AI agents to the most authoritative, up-to-date, and important content. You can use it to:

- Summarize Key Pages - Provide a brief, machine-readable summary of your most valuable content.

- Highlight Important URLs - Showcase lists like money pages, core guides, and essential resources.

- Define Access Policies - State how you would like an AI agent to use your content (for example, for search synthesis but not for training data). The proposal has no enforcement mechanism, so treat this as advisory.

Setting this up is a key part of your AI optimization for SEO strategy and positions a brand as a clear, trusted source.
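To make the idea concrete, here is a rough sketch that writes a file in the Markdown layout the llms.txt proposal describes, as we understand it: a top-level title, a short blockquote summary, and sections of annotated links. The site name, section names, and URLs are invented for the example.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render llms.txt content: title, summary, then sections of links.

    `sections` maps a heading to (link title, URL, short note) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Invented content; list your most important pages first.
llms_txt = build_llms_txt(
    "Example Co",
    "Example Co builds marketing websites and publishes guides on AI SEO.",
    {
        "Core guides": [
            ("AI SEO guide", "https://example.com/guides/ai-seo", "Full walkthrough of AI-first SEO"),
        ],
        "Services": [
            ("SEO services", "https://example.com/services/seo", "What we offer and pricing"),
        ],
    },
)
print(llms_txt)
```

The output would be saved as llms.txt at the root of the domain, as described in the checklist earlier.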

Optimize for Site Speed and Performance

AI crawlers, like human users, have only so much patience. If a site is slow to load, a crawler may time out before it can fully access and understand its content. This leads to incomplete indexing and can hurt any chances of being a cited source. Here is what to do:

- Core Web Vitals - Marketers need to focus on improving the Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay, FID, in March 2024), and Cumulative Layout Shift (CLS).

- Clean Code - Minimize JavaScript, CSS, and other render-blocking resources. Marketers need to use clean, semantic HTML that is easy for a machine to parse.
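To turn raw measurements into something actionable, a small helper like the one below can rate each metric. The thresholds are the "good" and "poor" boundaries Google has published for Core Web Vitals as we understand them at the time of writing; verify them against current documentation before relying on them. The function name and sample values are hypothetical.

```python
# (good_up_to, needs_improvement_up_to) per metric; values above the second
# number count as poor. Assumed from Google's published guidance.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "FID": (100, 300),   # milliseconds (legacy metric, replaced by INP)
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a measurement as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical field measurements for one page.
page = {"LCP": 2.1, "INP": 350, "CLS": 0.3}
for metric, value in page.items():
    print(metric, value, rate(metric, value))
```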

Use Clean Metadata, Schema, and Semantic Markup

Metadata and Schema are the new keyword strategy for AI.

- Clean Metadata - Write clear, concise meta titles and descriptions that summarize your content's main purpose.

- Schema Markup - Implement Schema.org markup using JSON-LD. This is crucial for defining entities and relationships. Use schemas such as Article, FAQPage, HowTo, Product, and Review to tell the AI exactly what the content is about.

- Semantic Markup - Marketers need to use proper heading structures (h1 through h6) and logical semantic tags (<article>, <section>).
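Heading structure is one part of this list that can be checked mechanically. The sketch below flags two common problems using the standard-library HTML parser. Treating "exactly one h1" and "no skipped levels" as rules is an assumption based on common practice, not something the HTML specification strictly requires.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect heading levels (1 to 6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so <H2> arrives here as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """Return human-readable problems with the heading hierarchy."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    for previous, current in zip(parser.levels, parser.levels[1:]):
        if current > previous + 1:
            problems.append(f"heading jumps from h{previous} to h{current}")
    return problems


# Hypothetical page fragment that skips from h1 straight to h3.
print(heading_problems("<article><h1>Guide</h1><h3>Details</h3></article>"))
```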

Keep Content Unified and Crawlable

AI agents and search engines are optimized to find a complete answer in one place, not piece information together from several different locations.

They prefer a single, comprehensive source of truth over an array of patched short articles.

For content to perform well, marketers must avoid fragmentation by keeping related ideas, data, and topics on one authoritative page instead of spreading them across multiple thin posts.

A well-organized site also requires clear internal linking. This means creating a logical, organized system of links that guides AI crawlers and human users easily from one piece of related content to the next.

When content is consolidated and linked properly, it creates a stronger, more efficient path for AI to understand the site's full value. This makes the information much more likely to be selected as the basis for AI-generated search results and answers.

Provide Programmatic Access via APIs and RSS

For technical SEO specialists and Software as a Service (SaaS) companies, the highest level of AI optimization is providing direct, programmatic access to your data.

The best way to do this is by offering public-facing APIs (Application Programming Interfaces). For resources like knowledge bases, product documentation, or dynamic datasets, an API allows an AI agent to query and retrieve information in a structured format and in real time.

Structured RSS feeds are also a simple but effective technique. These feeds give AI a streamlined way to get real-time updates whenever you publish new content. Offering both APIs and structured feeds makes it easy for AI to access, understand, and use your information instantly.

Indicate Content Freshness Clearly

AI systems are biased towards fresh, up-to-date information, making content freshness a critical ranking factor.

To capitalize on this, marketers should make content freshness explicitly clear to both human users and machines. First, display a clear "Last Updated" date on pages, particularly for the most important "evergreen" content that is refreshed regularly.

Second, for a machine-readable signal, use Schema markup (mentioned above) by incorporating the "dateModified" property.

This structured data tag provides a direct signal to AI crawlers and search engines that the page has been recently reviewed and updated.

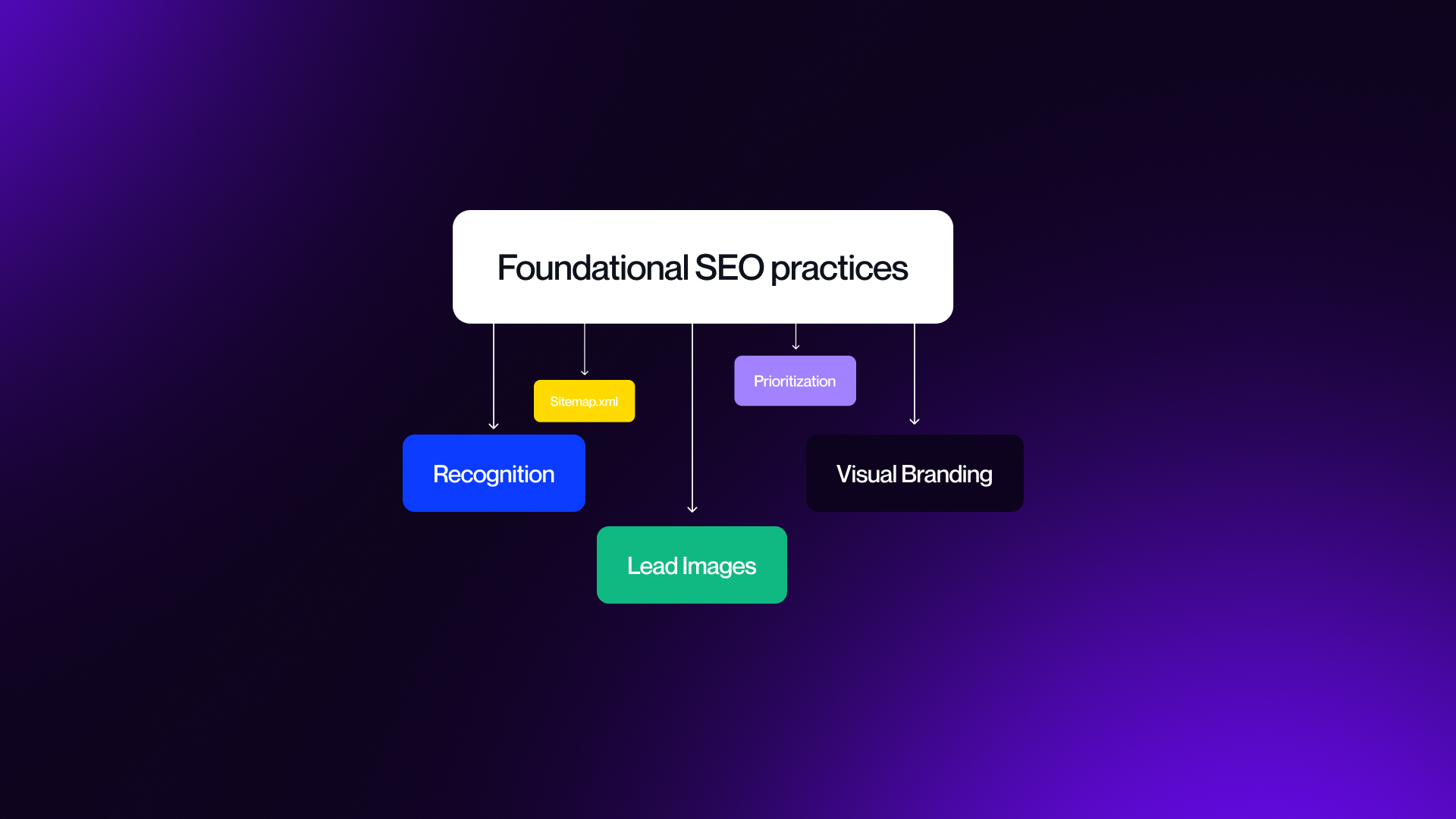

Don’t Forget Basics: Sitemap.xml, Favicon, Lead Images

The following practices are foundational SEO, but they carry new weight and sway.

1. Sitemap.xml for Prioritization

The sitemap.xml file remains the primary and most efficient method for telling all crawlers, including the new AI-specific ones, which pages on your site are the most important and should be prioritized for indexing. Always keep it fully up to date to give AI the clearest possible map of your valuable content.
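Most platforms generate this file for you, but as a minimal sketch of what "up to date" means in practice, the snippet below builds a sitemap with a last-modified date per page. The URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Build sitemap.xml content from (URL, last modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages; regenerate the file whenever content changes.
sitemap_xml = build_sitemap([
    ("https://example.com/", date(2026, 1, 15)),
    ("https://example.com/guides/ai-seo", date(2026, 1, 10)),
])
print(sitemap_xml)
```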

2. Visual Branding and Recognition

AI-generated search results are becoming increasingly visual. To make your brand stand out and build recognition, pay close attention to visual elements, like:

- Favicon - Use a clear and distinct favicon (the small icon next to the site title) so your brand is easily recognizable in visual AI result snippets.

- Lead Images - Every important page should have a compelling, well-optimized lead image. Crucially, ensure this image has proper alt text to provide AI with the necessary context, helping your content appear prominently in visual AI search results.

Major AI Crawlers You Need to Know in 2026

To execute AI SEO properly, marketers need to understand the bots that power the new generation of search and generative AI. These crawlers are not all the same; they have different purposes and require different strategies.

GPTBot (OpenAI)

GPTBot is the web crawler from OpenAI. Its primary mission is to gather publicly available data to train and fine-tune OpenAI's large language models (LLMs), like the one behind ChatGPT.

Unlike traditional search engine crawlers that index content for search results, GPTBot collects information to deepen the AI's understanding of language and the world.

While blocking GPTBot might seem like a way to prevent your content from being used, it can also mean a brand is less likely to show up in AI-generated answers, a significant missed opportunity for AI search optimization and brand visibility.

Google-Extended and SGE Crawlers

Google has introduced a specific user-agent token, Google-Extended, that allows website publishers to manage whether their content can be used to train future Gemini models. According to Google's crawler documentation, Google-Extended does not affect a site's inclusion in Google Search; AI Overviews (formerly the Search Generative Experience, SGE) draw on content crawled by the standard Googlebot.

Google-Extended operates alongside the standard Googlebot, which is still used for search indexing. For AI SEO, allowing both is the safest way to ensure content is a potential source for the AI-powered summaries that are becoming more prominent in search results.

PerplexityBot

Perplexity AI, known for its conversational search engine, uses PerplexityBot. This crawler is designed to index web content specifically for its real-time answer generation, and it's particularly valuable for AI content optimization.

Perplexity's platform is known for providing clear citations and links to its sources, so allowing PerplexityBot to crawl your site can be a direct driver of referral traffic and brand authority. Unlike GPTBot, which is primarily for training, PerplexityBot is for real-time information retrieval for user queries.

Anthropic ClaudeBot

ClaudeBot is the web crawler for Anthropic, the company behind the AI assistant Claude. Similar to GPTBot, its purpose is to collect training data for its LLMs.

The ethical considerations around AI and content are a major focus for Anthropic, and their crawler is part of their broader strategy for safe and helpful AI.

If your SEO strategy includes getting your content used in high-quality, ethical AI models, allowing ClaudeBot is a great choice.

AppleBot (AI Features Growing)

Apple's standard Applebot has long been used for Siri and Spotlight Suggestions. With the launch of "Apple Intelligence," a new user-agent, Applebot-Extended, has appeared.

This new agent specifically determines whether content may be used for training Apple's generative AI models. The key distinction is that marketers can allow the standard bot for search while disallowing the new one if they don't want their content used for AI training.

For brands targeting Apple users, optimizing for both crawlers is a must to secure visibility across the Apple ecosystem.

LLaMA-Based and Emerging Niche AI Crawlers

Beyond the major players, open-source models like Meta's LLaMA are enabling a new wave of niche AI agents and crawlers. These bots may have specialized purposes, such as gathering data for a specific industry or performing a unique function.

This means monitoring your server logs for new and unexpected user-agents and using your robots.txt and llms.txt files to manage and guide them as they emerge. The ability to quickly adapt to these new crawlers will be a key differentiator in the years to come.
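Log monitoring of this kind can start very simply. The sketch below assumes access logs in the common "combined" format, where the user-agent is the last quoted field, and it uses shortened, made-up user-agent strings. The token list and the "bot/crawler/spider" heuristic for spotting unknown agents are assumptions to adapt to your own traffic.

```python
import re
from collections import Counter

# Name tokens these crawlers are understood to include in their user-agent.
KNOWN_AI_TOKENS = ["GPTBot", "PerplexityBot", "ClaudeBot", "Applebot"]
GENERIC_BOT_HINTS = ("bot", "crawler", "spider")
# In the combined log format the user-agent is the final quoted field.
LAST_QUOTED_FIELD = re.compile(r'"([^"]*)"\s*$')


def summarize_bots(log_lines):
    """Count hits from known AI crawlers and collect unrecognized bot agents."""
    known = Counter()
    unknown = set()
    for line in log_lines:
        match = LAST_QUOTED_FIELD.search(line)
        if not match:
            continue
        user_agent = match.group(1)
        lowered = user_agent.lower()
        hits = [t for t in KNOWN_AI_TOKENS if t.lower() in lowered]
        if hits:
            known.update(hits)
        elif any(hint in lowered for hint in GENERIC_BOT_HINTS):
            unknown.add(user_agent)
    return known, unknown


# Made-up log lines with simplified user-agent strings.
sample_log = [
    '203.0.113.5 - - [15/Jan/2026:09:00:00 +0000] "GET /guide HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    '203.0.113.6 - - [15/Jan/2026:09:01:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    '198.51.100.7 - - [15/Jan/2026:09:02:00 +0000] "GET / HTTP/1.1" 200 1024 "-" "ExampleNicheBot/0.1"',
    '192.0.2.8 - - [15/Jan/2026:09:03:00 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
known_hits, unknown_agents = summarize_bots(sample_log)
print(known_hits, unknown_agents)
```

User-agent strings can be spoofed, so anything surfaced here is a prompt for a closer look rather than proof of who is crawling.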

This video from Bernard Marr breaks down the top AI trends in 2026, which are closely related to the crawlers that power them.

AI-Powered SEO Tools Worth Using

The right tools are essential for a successful AI SEO strategy. The following platforms have adapted to the new AI-first landscape, helping you with everything from content creation to real-time monitoring.

Semrush AI Features

Semrush has integrated AI features across its platform to help with AI search optimization.

Key functionalities include its AI SEO Toolkit, which analyzes brand mentions in AI conversations to help marketers understand their visibility and brand sentiment in LLM-generated responses.

Marketers can also track how their content is performing in AI Overviews, providing crucial data for adapting their strategy.

Ahrefs for Technical AI SEO Insights

While known for backlinks, Ahrefs has also moved into technical AI SEO. Its Site Audit tool flags issues that could hinder AI crawlability, and its IndexNow integration can instantly notify participating search engines (and the AI chatbots built on them) of site changes.

Specifically, these features allow marketers to monitor both mentions of their brand and the overall sentiment (positive, negative, or neutral) when their content appears in AI-generated search results.

Surfer SEO and Clearscope (Semantic Optimization)

These tools analyze the top-ranking results for a given query to identify semantically related keywords, entities, and topics that an AI would expect to see in a high-quality article.

This ensures content isn't just keyword-stuffed, but is topically complete and well-structured, a key factor for an AI to cite your page.

Frase (Content and AI Alignment)

Frase is a tool built to align content with AI. It helps marketers with research, outlining, and writing content that is optimized for both search engines and generative AI platforms.

The platform analyzes competitor content and provides real-time recommendations, helping ensure your content has what's needed to serve as an authoritative source for AI-generated answers.

ChatGPT and Custom GPTs (Research, Outlines, Drafts)

If you are looking for a more hands-on approach, you can use ChatGPT and create custom GPTs.

It is not a dedicated SEO tool with real-time data; its value is in brainstorming content ideas, creating detailed content outlines, drafting initial content, and even generating Schema markup.

The key is to provide specific prompts to get the best output for your AI content optimization.

Conductor or ContentKing (Real-Time Monitoring)

Previously, SEO audits were one-off events that took a chunk of time and were often left until last. Now, with the speed of AI, you need real-time monitoring.

Tools like Conductor and ContentKing provide 24/7 surveillance of a site, alerting you to critical SEO issues as they happen.

This is crucial for protecting visibility, as a small technical error can instantly make content inaccessible to a live AI crawler.

How AI Is Reshaping Core SEO Practices

The core practices of SEO are not being replaced by AI; they are being supercharged. AI is introducing a new level of precision and automation that allows marketers to move beyond best practices and into an SEO world of hyper-targeted, data-driven strategy.

Keyword Research in the Age of AI

Traditional keyword research was a quantitative exercise: finding keywords with high search volume and low competition.

Now, AI has made it a qualitative one focused on user intent. AI marketing tools go beyond simple keyword suggestions, analyzing search behavior to predict trending keywords, identify the questions behind queries, and cluster related topics semantically.

This lets marketers target the concepts and entities that matter most to an AI, not just the keywords themselves. This is a big move from simply trying to rank for a term to becoming an authority on a topic.

On-Page SEO with AI Scoring

AI-powered on-page SEO tools, like Surfer SEO and Frase, are changing the optimization process.

Instead of guessing, marketers now get a real-time AI score that tells them how well their content aligns with what the top-ranking pages and AI models expect to see.

These tools analyze competitor content to suggest missing topics, relevant questions, and proper keyword usage.

This data-driven approach removes the guesswork, ensuring your content is both beneficial for the user and machine-readable for the AI.

Technical SEO with AI Auditing

Technical SEO can be time-consuming and complex, but AI has been a game-changer.

AI-powered tools can crawl your website at high speed, identifying and prioritizing technical errors that would take a human auditor weeks to find.

They can detect broken links, crawl errors, and performance issues in real time. These tools can also predict how fixing an issue might impact rankings, allowing you to prioritize tasks based on their potential SEO value.

This predictive capability is invaluable for efficient resource allocation and a core part of an effective AI SEO strategy.

Backlink Prospecting with AI Discovery Tools

The process of backlink prospecting is being revolutionized by AI. New tools use machine learning to scour the web for high-quality, relevant websites that are perfect for outreach.

These tools go beyond simply finding sites with a high domain authority. They analyze the contextual relevance of a page, find the right contact person, and can even help create personalized outreach messages.

By automating the most time-consuming parts of link building, AI allows SEOs to focus on building meaningful relationships and securing high-value links.

Local SEO with AI-Powered Precision

AI has brought a new level of precision to local SEO. AI tools can analyze real-time data from local searches, pinpointing specific local keywords and content gaps.

For instance, these tools can help optimize your Google Business Profile, manage customer reviews, and even post updates automatically.

This helps your business appear in the coveted "local pack" and get cited by AI for "near me" searches. The focus shifts from broad local signals to hyper-personalized, AI-driven local experiences.

For more insights on how to leverage these changes, this video offers a look into ten effective local SEO strategies.

Optimizing Content for AI Agents (Not Just Search Engines)

The biggest change in this space isn't just AI-driven search; it's the huge rise of AI agents.

These autonomous systems act on behalf of a user, performing multi-step tasks that go far beyond a single search query.

For a brand, this represents a new frontier for visibility, one that requires a different approach to content optimization.

What AI Agents Are and Why They Matter

An AI agent is a software system that uses AI to pursue goals and complete tasks on behalf of a user.

Unlike traditional search engines, which simply provide a list of links, an agent can reason, plan, and take action. A user might ask an agent to "plan a trip to Istanbul" or "find me the best Turkish coffee pot for under $50."

The agent then breaks down this complex request into smaller tasks, using its own tools and knowledge to find information, compare options, and even make a booking or purchase.

For a brand, this means you are no longer just competing for a click; you're vying to be the source that the agent chooses as the correct, most authoritative piece of information to complete its task.

How Agents Consume Content Differently Than Search

Traditional search engines primarily consume content by indexing pages for keywords and links. AI agents are much more sophisticated.

- Goal-Oriented - Agents read content with a specific objective in mind. They don't just passively index; they actively seek out the exact information they need to fulfill a user's request.

- API-First - While they can and do crawl web pages, many advanced agents prefer to access information programmatically through APIs. This gives them clean, structured data, which is far more reliable than parsing a web page's HTML.

- Contextual Understanding - An agent can understand the context of an entire conversation or task. It remembers previous steps and uses that context to find the most relevant information. This makes simple keyword-matching less effective and emphasizes the importance of providing comprehensive, context-rich content.

Formatting Content for Agents (Clean, Structured, API-Ready)

To win over AI agents, content needs to be both machine-readable and semantically rich.

- Clean, Semantic HTML - Use proper HTML tags (<h2>, <p>, <ul>) to create a clear document hierarchy. Avoid using excessive JavaScript for core content, as some agents may struggle to parse it.

- Structured Data - Use Schema.org markup to define what your content is about. For example, a page about a product should use the Product schema to define the name, price, and features. This is the clearest way to communicate with an agent.

- APIs and Feeds - For companies with dynamic data (product catalogs, knowledge bases), providing a public-facing API or a clean RSS feed is the ultimate form of AI optimization. It allows the agent to get the information it needs directly, without having to crawl and parse a website.
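As a rough illustration of the Structured Data point above, here is a minimal Python sketch that builds Schema.org Product markup as JSON-LD. The product name, price, and features are made-up sample values, and the helper names (product_jsonld, as_script_tag) are hypothetical. Listing features through additionalProperty is one reasonable choice, not the only valid one.

```python
import json


def product_jsonld(name, price, currency, features):
    """Build a Schema.org Product description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        # Each feature becomes a PropertyValue entry (an illustrative choice).
        "additionalProperty": [
            {"@type": "PropertyValue", "name": "Feature", "value": feature}
            for feature in features
        ],
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes into the page HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Sample values only; replace with real catalog data.
product = product_jsonld(
    "Copper Turkish Coffee Pot",
    39.5,
    "USD",
    ["Hand-hammered copper", "Holds 3 cups"],
)
print(as_script_tag(product))
```

Generating the markup from the same data that drives the page helps keep the visible price and the machine-readable price from drifting apart.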

Preparing Your Brand for AI Summaries and Recommendations

AI agents will condense information from multiple sources to provide a single, concise recommendation. Your brand's success will depend on its ability to be chosen as a primary source.

- Be the Authority - Marketers need to create comprehensive, authoritative content on a single topic rather than a lot of fluffy articles. This makes your page a go-to source for the agent.

- Brand Story and Values - AI models are increasingly trained to understand brand identity and values. Ensure your "About Us," mission, and ethical stances are clearly articulated and consistent across your digital properties.

- User-Generated Content - Reviews, testimonials, and case studies are vital. Agents use this data to understand what real people think of your brand and product, which can heavily influence a recommendation.

- Consistency is Key - Ensure your brand's messaging, tone, and values are consistent across your website, social channels, and all other content. Vague, fluffy, or contradictory information makes it difficult for an agent to understand what your brand stands for, and your brand will become invisible in AI search.

Extra Section: Ethical and Legal Considerations in AI SEO

As AI's role in content and search grows, so do the legal and ethical questions surrounding its use. Brands that want to build trust and maintain a sustainable AI SEO strategy need to address these questions, because AI-generated content carries a cost if it is not handled with care.

Content Ownership and AI Training

One of the most contentious issues is content ownership. AI models are trained on massive datasets scraped from the public web, including copyrighted materials.

This has led to lawsuits from news organizations, authors, and artists who claim that their work is being used without permission or compensation.

From a brand's perspective, this raises questions about the originality of AI-generated content and who, if anyone, holds the copyright for it.

Generally, courts and copyright offices have determined that a work must have a human author to be eligible for copyright protection.

To protect your brand, it's essential to:

- Verify Originality - Use plagiarism checkers to ensure any AI-assisted content is unique and not a direct copy of a source.

- Human Oversight - Ensure human expertise is the final arbiter of all published content. The more human input and editing, the stronger your claim to ownership.

Opt-Out vs. Opt-In: What Businesses Should Know

The legal framework for AI training is shifting from an opt-in to an opt-out model. In an opt-out system, AI crawlers are free to scrape your content for training unless you explicitly tell them not to.

This is the case with crawlers like Google-Extended and GPTBot, which you can block in your robots.txt file. Conversely, an opt-in model would require AI companies to get your permission before using your content.

The EU's Copyright in the Digital Single Market Directive provides an opt-out framework, and other regions are considering similar legislation. Your business should:

- Decide Your Stance - Determine whether you want your content used for AI training. Do you value the visibility, or do you want to protect your intellectual property?

- Configure Accordingly - Use your robots.txt file to enforce your decision, and your llms.txt file to point AI systems to the content you do want them to read. Blocking crawlers will keep your content out of AI training, but it may also hurt your chances of being a cited source in live AI search results.

Transparency in AI-Generated Content

Transparency is a main point of conversation when it comes to responsible AI. Users, consumers, and search engines want to know when content is AI-generated or AI-assisted.

Google, for example, has indicated that while AI-generated content isn't wholly bad, it must still meet its quality and helpfulness standards.

- Disclose Usage - Be transparent about your use of AI. This doesn't mean you have to slap a disclaimer on every paragraph, but it does mean acknowledging your use of the technology in a way that builds trust with your audience. *Side note: These days, almost every content writer uses AI for research and as a writing assistant. This doesn't make your work less credible, but be transparent about it.

- Explain the "Why" - Explain how AI is used to enhance your content, for example, by automating data analysis or generating a first draft that a human expert then polishes.

- Ethical AI Principles - Develop and publish your own internal guidelines for AI use, covering everything from bias mitigation to data privacy. This demonstrates a commitment to ethical practices.

Preparing for Voice and Multimodal AI Search

Let’s be real, search isn't just text-based anymore. With smart speakers, in-car assistants, and smart devices, search is becoming voice-first and multimodal, meaning it combines text, images, video, and audio.

Optimizing for this future is a key part of any AI SEO strategy.

Optimizing for Voice-First Queries in AI Agents

Voice search queries are different from typed queries. They are longer, more conversational, and often include more context. For example, a user might type "best Italian food Toronto," but ask their smart speaker, "Hey Google, what's the best Italian restaurant near me right now?" Here is what marketers need to do to get the most out of this aspect of AI SEO.

- Answer Questions Directly - Marketers need to structure content to provide a direct, concise answer (40-50 words) to a common question in the first paragraph of a section.

- Conversational Language - Use natural language in your headlines and body copy. For instance, a headline like "What is an AI SEO Strategy?" will be more effective for voice search than "AI SEO Strategy."

- Local SEO is Critical - Voice searches often have a strong local intent. Ensure your Google Business Profile is fully optimized with accurate information, and include local keywords and landmarks in your content to capture "near me" searches.
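The "direct answer in the first paragraph" guideline above can be spot-checked with a small script. The sketch below is only a rough editorial aid. It assumes the section text is passed in without its headline and that paragraphs are separated by blank lines. The function names and the default 40-50 word window (taken from the guideline above) are illustrative.

```python
import re


def first_paragraph(section_text):
    """Return the first non-empty paragraph (paragraphs split on blank lines)."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", section_text) if p.strip()]
    return paragraphs[0] if paragraphs else ""


def answer_length_ok(section_text, low=40, high=50):
    """Check whether the opening paragraph falls inside the target word range.

    Returns a (is_ok, word_count) tuple so editors can see how far off they are.
    """
    word_count = len(first_paragraph(section_text).split())
    return low <= word_count <= high, word_count


# Hypothetical section: a 45-word opening answer followed by supporting detail.
sample_section = " ".join(["word"] * 45) + "\n\nSupporting detail goes here."
print(answer_length_ok(sample_section))  # (True, 45)
```

A word count says nothing about whether the paragraph actually answers the question, so treat it as a flag for human review rather than a pass/fail gate.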

Image and Video SEO for AI Summaries

Generative AI models are becoming more multimodal, meaning they can analyze and understand visual content. This makes image and video SEO more important than ever.

- Descriptive Alt Text - Go beyond simple keywords. Marketers will need to write detailed, natural language alt text that accurately describes the image. An AI can use this to understand the context of an image and use it as a source. For example, instead of "espresso machine," use "A close-up shot of a stainless steel espresso machine with steam rising."

- Video Transcripts - Marketers need to provide an accurate transcript for every video published. AI crawlers can't “watch” a video, but they can read a transcript. This lets the AI understand the entire content of your video and use it as a source for its summaries.

- High-Quality Visuals - Use high-resolution, original images and videos. An AI can detect the quality and originality of a visual asset, which can factor into whether it's chosen as a reliable source.

Structured Data for Multimodal Retrieval

Structured data, especially in the context of multimodal search, is the new frontier. It's how you tell an AI how your various content types are related.

- ImageObject and VideoObject Schema - Use these specific Schema.org markups to provide rich, machine-readable information about your visual assets. Marketers can define the image dimensions, file type, and a description.

- About and Mentions Properties - The about and mentions properties let marketers define what an image or video is about and which other entities it mentions. This helps an AI connect your visual content to your broader digital knowledge graph.

- Unified Data Model - Use a consistent approach to structured data across all your content types (text, image, video). This helps the AI build a complete understanding of a brand and its offerings, which leads to more accurate and favorable citations in multimodal AI search results.
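To make the VideoObject, about, and mentions points above more concrete, here is a minimal Python sketch that assembles the markup as JSON-LD. All values (titles, example.com URLs, transcript text) are placeholders, and the helper name video_jsonld is hypothetical. The property names themselves (contentUrl, thumbnailUrl, uploadDate, transcript, about, mentions) come from Schema.org.

```python
import json


def video_jsonld(name, description, content_url, thumbnail_url,
                 upload_date, transcript, about, mentions):
    """Build Schema.org VideoObject markup that links the video to entities."""
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "contentUrl": content_url,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,
        # The transcript gives text-only crawlers access to the video's content.
        "transcript": transcript,
        # "about" is the main subject; "mentions" lists secondary entities.
        "about": {"@type": "Thing", "name": about},
        "mentions": [{"@type": "Thing", "name": m} for m in mentions],
    }


# Placeholder values for illustration only.
video = video_jsonld(
    name="How to Descale an Espresso Machine",
    description="A step-by-step descaling walkthrough.",
    content_url="https://example.com/videos/descale.mp4",
    thumbnail_url="https://example.com/images/descale-thumb.jpg",
    upload_date="2026-01-15",
    transcript="First, empty the water tank...",
    about="Espresso machine maintenance",
    mentions=["Descaling solution", "Water filter"],
)
print(json.dumps(video, indent=2))
```

Using one builder per content type, all fed from the same entity names, is one simple way to approach the unified data model described above.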

Why Growth-Focused Teams Choose Veza Digital for AI and SEO

For B2B, SaaS, and enterprise companies, AI-first search requires a partner that understands both the technical aspects and the strategic execution. Veza Digital combines this with a proven track record of delivering real, measurable results for AI-driven business growth.

Enterprise-Ready SEO with AI in Mind

Enterprise-level SEO needs scale and precision, and our team of experts is able to handle the complexities of large marketing websites, including intricate Webflow migrations and full SEO audits. The approach we take is not about a quick fix; it's about building a long-term AI SEO strategy that can handle millions of pages and thousands of keywords.

Our team understands how to integrate AI tools and processes in a way that provides a competitive advantage without sacrificing brand integrity or authority. The strategies we implement are designed to ensure that your content is not only visible but also trusted and cited by AI models and search engines.

Experience Migrating Brands into AI-Friendly Webflow Sites

Webflow is a unique platform that offers a powerful and clean foundation for AI optimization.

Unlike outdated platforms or ones that rely on bloated code and plugins, Webflow's structure is fast, responsive, and semantically solid.

Our Webflow experts have extensive experience migrating enterprise websites from platforms like WordPress to Webflow, ensuring a seamless transition that protects your SEO rankings and sets you up for success in the AI-first era. Our migration process includes a comprehensive technical SEO audit to ensure your new site is properly configured for AI crawlers.

AI and SEO Strategy, Not Just Tools

Anyone can use an AI tool, but a solid AI SEO strategy requires a deep understanding of how to actually use these tools for growth. We have moved beyond simply using AI for content generation. We focus on a human-led, AI-enhanced approach that uses AI for:

- Market Intelligence - Identifying new trends and content gaps that competitors are missing.

- Content Auditing - Analyzing massive content libraries to find opportunities for consolidation or optimization.

- Technical Auditing - Quickly diagnosing and prioritizing technical issues that impact AI crawlability.

- Real-time Monitoring - Tracking brand mentions and citations in live AI search results.

This strategic oversight ensures that AI is a tool to amplify your expertise, not a crutch that replaces it.

Transparent, Growth-Driven Results

Veza Digital is committed to transparency. We don't hide behind buzzwords and vague promises. Our reporting is clear, focused on the metrics that matter, and directly tied to your business goals.

We measure success not just in traffic, but in citation share, lead quality, and real ROI. We use a data-driven approach to prove the value of our work, ensuring you always know how your AI search optimization boosts your bottom line.

Our goal is to be a true partner in your growth, helping you dominate your industry with an AI-first SEO strategy that delivers long-term results.

FAQs About AI and SEO

What is AI SEO?

AI SEO is a new approach to search engine optimization that leverages artificial intelligence to enhance and automate key strategies. Instead of just targeting keywords for a ranked list, AI SEO focuses on making content understandable and trustworthy for both traditional search engines and AI-driven systems. It uses machine learning to analyze user intent, structure content for machine readability, and identify patterns that would be impossible for a human to find manually.

Is SEO dead in the age of AI?

No, SEO is not dead; it's evolving. The old-school, keyword-stuffing tactics are dying, but the core purpose of SEO, making information findable and understandable, is more important than ever. The focus has shifted from winning a click to becoming a trusted source that an AI will cite in a generative answer. The future of SEO with AI is about quality, authority, and adapting to new technologies.

What is llms.txt and do I need it?

The llms.txt file is an emerging, proposed standard designed to give large language models (LLMs) and AI agents a structured, prioritized map of your website's content. While robots.txt tells crawlers what they can't access, llms.txt tells them what your most important content is. You don't technically need it yet, but setting it up is a proactive step that positions your brand as a clear, authoritative source for AI-driven systems.
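For a sense of what such a file can look like, the Python sketch below generates a small llms.txt. It follows the general layout of the public llms.txt proposal: a title line, a short quoted summary, then sections of annotated links. The site name, URLs, and the build_llms_txt helper are hypothetical, and there is no guarantee that any given AI system reads the file.

```python
def build_llms_txt(site_name, summary, sections):
    """Render a simple llms.txt document.

    sections maps a section heading to a list of (title, url, note) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    # End the file with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content for illustration only.
llms_txt = build_llms_txt(
    "Example Co",
    "Example Co sells handmade coffee equipment.",
    {
        "Products": [
            ("Pricing", "https://example.com/pricing", "Plans and prices"),
        ],
        "Guides": [
            ("Brewing guide", "https://example.com/guides/brewing", "How to brew"),
        ],
    },
)
print(llms_txt)
```

The output would be saved as llms.txt at the site root, alongside robots.txt.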

Can AI agents replace Google search?

AI agents will not replace Google search entirely. Instead, they will coexist and evolve alongside it. Google is already integrating AI with features like AI Overviews (formerly Search Generative Experience, or SGE), and other AI agents like Perplexity are providing a conversational, direct-answer experience. The future is likely a hybrid model where users choose between a traditional search and an AI-powered agent, depending on the complexity of their task.

How do I know if my content is visible to AI?

To know if your content is visible to AI, check your server logs for the user agents of major AI crawlers like GPTBot and PerplexityBot. Note that Google-Extended is a robots.txt control token rather than a separate crawler, so it does not appear in logs under its own user agent. You can also monitor your brand's presence in AI-generated answers. Additionally, Semrush and Ahrefs are developing features that track how your content is cited in AI Overviews and conversational searches, which is the ultimate measure of visibility.
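The server-log check described above can be sketched in a few lines of Python. This is a simplified illustration: the sample log lines are invented, the function name is hypothetical, and matching is a plain substring search on the user-agent token. Google-Extended is left out because Google documents it as a robots.txt control token without its own user-agent string.

```python
from collections import Counter

# User-agent tokens to look for (taken from the crawlers named above).
AI_CRAWLER_TOKENS = ("GPTBot", "PerplexityBot")


def count_ai_crawler_hits(log_lines, tokens=AI_CRAWLER_TOKENS):
    """Count log lines whose text contains a known AI crawler token."""
    hits = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in tokens:
            if token.lower() in lowered:
                hits[token] += 1
                break  # count each request once
    return hits


# Invented sample lines in a common access-log style.
sample_log = [
    '203.0.113.5 - - [10/Jan/2026:10:00:00 +0000] "GET /blog HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    '203.0.113.9 - - [10/Jan/2026:10:01:00 +0000] "GET /pricing HTTP/1.1" 200 204 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2026:10:02:00 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.5 - - [10/Jan/2026:10:03:00 +0000] "GET /guides HTTP/1.1" 200 731 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
]
hits = count_ai_crawler_hits(sample_log)
print(dict(hits))  # {'GPTBot': 2, 'PerplexityBot': 1}
```

In practice the lines would come from your own access log file, and user-agent strings can be spoofed, so counts like these are an indication rather than proof of crawler activity.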

What are the best AI SEO tools in 2026?

The best AI SEO tools in 2026 are those that move beyond simple content generation to provide deep insights and automation. We recommend:

- Semrush & Ahrefs - Enterprise-level technical audits and competitor analysis.

- Surfer SEO & Clearscope - Semantic content optimization and topical authority.

- Frase - Building comprehensive content briefs and aligning content with AI.

- Conductor & ContentKing - Real-time monitoring and proactive issue detection.

How do I optimize for Perplexity or ChatGPT search?

To optimize for Perplexity or ChatGPT, you need to provide clear, direct answers to questions in a conversational tone. Focus on these points:

- Direct Answers: Start your content sections with a clear answer to a common question.

- Conversational Tone: Write naturally, as if you're talking to a friend.

- Structured Data: Use Schema markup to give these systems clean, machine-readable data.

- Topical Authority: Create comprehensive guides that are the single source of truth on a topic.

Will link building still matter for AI SEO?

Yes, link building still matters. AI models, just like traditional search engines, rely on external signals of authority and trustworthiness. A backlink from a reputable, relevant source serves as a strong vote of confidence. AI agents use these signals to decide which sources are the most reliable to cite. The focus, however, is on quality over quantity: a few links from credible, relevant sites are worth more than many low-quality ones.

How do I stop AI crawlers if I don’t want my content used?

To stop AI crawlers from using your content for training, you can use your robots.txt file. You will need to add a "Disallow" rule for the specific user agents you want to block. For example, to block OpenAI's GPTBot, you would add User-agent: GPTBot followed by Disallow: /. Be aware that doing this may prevent your content from appearing in AI-generated search results and overviews.
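Before deploying a rule like the one described above, it can be checked with Python's standard-library robots.txt parser. The sketch below is a minimal example: the robots.txt content is a sample, the example.com URL is a placeholder, and the can_fetch helper is a hypothetical wrapper around urllib.robotparser.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt: block GPTBot everywhere, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def can_fetch(robots_txt, user_agent, url):
    """Return True if the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(can_fetch(ROBOTS_TXT, "GPTBot", "https://example.com/blog/post"))     # False
print(can_fetch(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post"))  # True
```

Keep in mind that robots.txt is a voluntary convention. It tells well-behaved crawlers what you want, but it does not technically prevent access.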

Key Takeaways

- SEO isn’t dead; it’s evolving with AI. The shift is from a keyword-driven, list-based search to a concept-driven, conversational ecosystem. The goal is to move from winning a click to becoming a trusted source that an AI will cite.

- Optimizing for AI requires metadata, structure, speed, and access. Websites need to be a machine-readable source of real information. This involves using clean, semantic HTML, rich structured data, and an AI-friendly robots.txt and llms.txt.

- AI agents are a new distribution channel for content. The future of search goes beyond Google. AI agents and assistants are acting on behalf of users, and your brand needs to be the definitive source they choose to complete a task.

- Teams that adapt now will have visibility in 2026. This is not a passing trend, and proactive brands that embrace AI search optimization will have a lasting advantage.

Ready to get ahead of the curve?

Our Webflow + SEO expertise ensures your website not only looks phenomenal and functions well but is also technically optimized for the AI-first world. We help growth-focused teams develop and execute an AI SEO strategy that drives real results.

Contact Veza Digital to future-proof your SEO strategy!
